Categorization of Product Reviews Using LLMs

Meta's recent release of Llama 3 as an open source LLM prompted me to explore the capabilities of this new state-of-the-art model. One use case I wanted to test it on was the categorization of product reviews. This would let brands and retailers know what their customers think of their products without having to read through thousands of reviews for every product in their catalogue.

Step One: Installation

To run Llama 3 locally, we are going to use Ollama, a framework that allows users to run LLMs on their own computer. We will also install the corresponding Python library with the following command: pip install ollama.

Step Two: Extracting Product Reviews

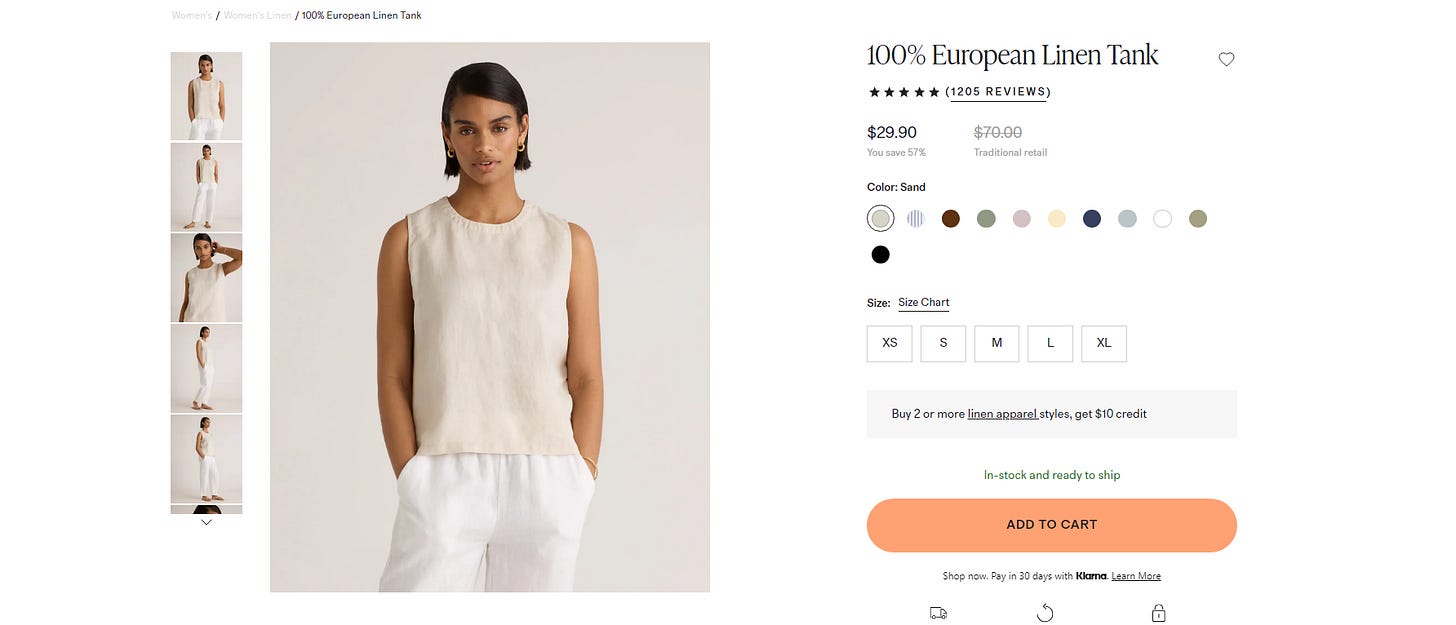

For the sake of this example, let’s extract the reviews of a product sold on the e-commerce website of Quince, a luxury brand that differentiates itself with its remarkably low prices.

We will use the Selenium library in Python to scrape all of this product’s reviews into dictionaries of the following format:

    {'review_id': 0,
     'rating': 5,
     'title': 'Comfy and flattering',
     'message': 'I love this too because it’s comfortable AND flattering, which can be hard to find. It’s perfect when I’m in the mood to wear something loose and flowy.'}

Side note: the main difficulty here was the pagination of the reviews on the product page. Clicking through to the next page of reviews loads the following reviews dynamically while staying on the same page, so they are not in the HTML returned by a simple HTTP request. This ruled out requests and Beautiful Soup, and meant mimicking user activity on the site to access the information.
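The pagination problem can be handled by letting Selenium click through the pages the way a user would. Below is a rough sketch of that loop under several assumptions: the CSS selectors are hypothetical placeholders (Quince's actual markup is not shown here), the star rating is assumed to appear as text containing a digit, and the end of pagination is detected either by a missing "next" control or by the old review cards never being replaced. `make_record` and `scrape_reviews` are illustrative names, not the author's code.

```python
import re

# Hypothetical CSS selectors: inspect the real product page and replace these.
REVIEW_CARD = "div.review-card"
RATING = ".review-rating"
TITLE = ".review-title"
MESSAGE = ".review-message"
NEXT_BUTTON = "button.next-page"


def make_record(review_id: int, rating_text: str, title: str, message: str) -> dict:
    """Build one review in the dictionary format shown above."""
    # Assumption: the first digit in the rating element's text is the star rating.
    match = re.search(r"\d", rating_text)
    return {
        "review_id": review_id,
        "rating": int(match.group()) if match else None,
        "title": title.strip(),
        "message": message.strip(),
    }


def scrape_reviews(url: str, max_pages: int = 100) -> list[dict]:
    """Collect reviews page by page by clicking the (hypothetical) 'next' control."""
    # Selenium is imported here so make_record can be used without a browser.
    from selenium import webdriver
    from selenium.common.exceptions import NoSuchElementException, TimeoutException
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support import expected_conditions as EC
    from selenium.webdriver.support.ui import WebDriverWait

    driver = webdriver.Chrome()
    reviews: list[dict] = []
    try:
        driver.get(url)
        for _ in range(max_pages):
            # Wait until the current page of review cards is in the DOM.
            cards = WebDriverWait(driver, 10).until(
                EC.presence_of_all_elements_located((By.CSS_SELECTOR, REVIEW_CARD))
            )
            for card in cards:
                reviews.append(make_record(
                    len(reviews),
                    card.find_element(By.CSS_SELECTOR, RATING).text,
                    card.find_element(By.CSS_SELECTOR, TITLE).text,
                    card.find_element(By.CSS_SELECTOR, MESSAGE).text,
                ))
            try:
                next_button = driver.find_element(By.CSS_SELECTOR, NEXT_BUTTON)
            except NoSuchElementException:
                break  # no "next" control: last page reached
            next_button.click()
            try:
                # The URL does not change, so wait for the old cards to be replaced.
                WebDriverWait(driver, 10).until(EC.staleness_of(cards[0]))
            except TimeoutException:
                break  # nothing new was loaded: assume this was the last page
    finally:
        driver.quit()
    return reviews
```

Waiting on `staleness_of` rather than a fixed `time.sleep` avoids reading the same page twice, but it only works if the site actually replaces the review elements when the page changes; if it reuses them, a different wait condition is needed.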

Step Three: Data Manipulation

Once all of the reviews are compiled, we filter them to keep only those with a rating of 3 or lower (rating <= 3). The idea is to isolate the negative reviews and feed only those to the LLM.
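The filter itself is a one-liner over the list of review dictionaries. A minimal sketch, assuming every review carries an integer `rating` as in the format above (`negative_reviews` is an illustrative name):

```python
def negative_reviews(reviews: list[dict], max_rating: int = 3) -> list[dict]:
    """Keep only the reviews rated max_rating stars or fewer."""
    return [review for review in reviews if review["rating"] <= max_rating]
```

If the reviews have been loaded into a pandas DataFrame instead, the equivalent filter is `df[df["rating"] <= 3]`.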

Step Four: Configuring and Using the LLM

The model is given the following prompt, which explains what we are trying to achieve and specifies the desired output:

You will be provided with product reviews from an e-commerce luxury brand in the following format: review_id, rating, title, message. Your goal will be to extract one, two or three main theme(s) that the user who wrote the review thinks are negative about the product. Provide the output in the following list format: ["theme 1", "theme 2"] or ["theme 1", "theme 2", "theme 3"]. In the case where there is nothing negative to report just return an empty list: [""]. Keep the themes to one word.

This was one of the trickiest parts of the project. Getting the model to return exactly the expected output took several iterations on the prompt, to stop it from answering with long sentences, blocks of text, or inconsistent formats.
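Even with a carefully worded prompt, it helps to parse the reply defensively. The sketch below sends the prompt quoted above as the system message through the `ollama` Python library and pulls the first bracketed list out of the reply. It assumes the Ollama server is running locally and the model has been downloaded with `ollama pull llama3`; the helper names, the `temperature` setting and the lower-casing of themes are illustrative choices, not the author's configuration.

```python
import ast

# The prompt quoted above, sent as the system message.
SYSTEM_PROMPT = (
    "You will be provided with product reviews from an e-commerce luxury brand in the "
    "following format: review_id, rating, title, message. Your goal will be to extract one, "
    "two or three main theme(s) that the user who wrote the review thinks are negative about "
    'the product. Provide the output in the following list format: ["theme 1", "theme 2"] or '
    '["theme 1", "theme 2", "theme 3"]. In the case where there is nothing negative to report '
    'just return an empty list: [""]. Keep the themes to one word.'
)


def format_review(review: dict) -> str:
    """Render a review as 'review_id, rating, title, message', as the prompt announces."""
    return f"{review['review_id']}, {review['rating']}, {review['title']}, {review['message']}"


def parse_themes(raw: str) -> list[str]:
    """Extract the list of themes from the reply, tolerating text around the list."""
    start, end = raw.find("["), raw.rfind("]")
    if start == -1 or end < start:
        return []  # no list in the reply
    try:
        value = ast.literal_eval(raw[start:end + 1])
    except (ValueError, SyntaxError):
        return []  # the bracketed text is not a valid list literal
    if not isinstance(value, list):
        return []
    # Lower-case and drop empty strings, so [""] (nothing negative) becomes [].
    return [str(t).strip().lower() for t in value if str(t).strip()]


def classify_review(review: dict, model: str = "llama3") -> list[str]:
    """Ask the local model for the negative themes of one review."""
    import ollama  # imported here so the helpers above work without Ollama installed

    response = ollama.chat(
        model=model,
        messages=[
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": format_review(review)},
        ],
        options={"temperature": 0},  # assumption: favours consistent formatting
    )
    return parse_themes(response["message"]["content"])
```

Lower-casing the themes means "Sizing" and "sizing" are counted as the same complaint later on, which matters when the replies are aggregated.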

Step Five: Data Visualization

Once the reviews were categorized into themes, the goal was to determine which negative experiences came up most often among customers. A word cloud is a good chart type for this, capped at a maximum of 10 words so that it shows only the most recurring themes and leaves out rare issues and outliers.
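Counting the themes and keeping the 10 most frequent is a job for `collections.Counter`. Here is a minimal sketch, assuming each review's themes are already a list of strings; the rendering step uses the third-party `wordcloud` package, whose `WordCloud` class also accepts a `max_words` parameter. The function names and image size are illustrative.

```python
from collections import Counter


def top_themes(theme_lists: list[list[str]], max_words: int = 10) -> dict[str, int]:
    """Count every theme across all reviews and keep the max_words most frequent."""
    counts = Counter(theme for themes in theme_lists for theme in themes if theme)
    return dict(counts.most_common(max_words))


def save_word_cloud(frequencies: dict[str, int], path: str = "negative_themes.png") -> None:
    """Render theme frequencies as a word cloud image (requires the wordcloud package)."""
    from wordcloud import WordCloud  # imported here so top_themes runs without it

    cloud = WordCloud(width=800, height=400, background_color="white", max_words=10)
    cloud.generate_from_frequencies(frequencies)
    cloud.to_file(path)
```

Capping the counts before rendering keeps the cut-off explicit and lets the same dictionary be reused for a bar chart or table.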

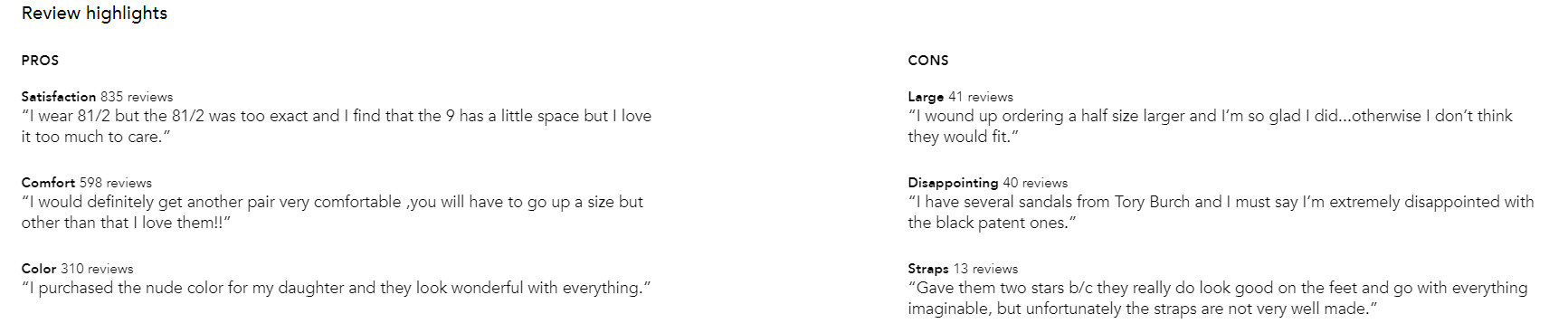

Having this sort of visualization for each product in a catalogue would be a major time-saver for brands that could not possibly read through thousands of comments. It would keep brand owners and retailers alike informed of their customers’ recurring concerns so that they can improve their products accordingly. However, it is also important to visualize the positive reviews to understand the perceived strengths of your products. Bloomingdale’s does this directly on its product pages, showing 3 pros and 3 cons for each product along with the number of reviews mentioning each one.
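A pros-and-cons summary like the one described above could be computed from the same kind of theme lists. The sketch assumes a second, hypothetical prompt has extracted positive themes from the well-rated reviews; it counts the number of reviews mentioning each theme (a theme repeated within one review counts once) and formats the top 3 on each side. Names and output layout are illustrative.

```python
from collections import Counter


def pros_and_cons(positive: list[list[str]], negative: list[list[str]], n: int = 3) -> dict:
    """Return the n most common pros and cons with the number of reviews mentioning each."""
    def count_reviews(theme_lists: list[list[str]]) -> list[tuple[str, int]]:
        # set() so a theme repeated within one review is counted only once
        counts = Counter(t for themes in theme_lists for t in set(themes) if t)
        return counts.most_common(n)

    return {"pros": count_reviews(positive), "cons": count_reviews(negative)}


def format_summary(summary: dict) -> str:
    """Format the summary as two lines, e.g. 'Pros: comfort (2 reviews)'."""
    lines = []
    for label in ("pros", "cons"):
        items = ", ".join(
            f"{theme} ({count} review{'s' if count != 1 else ''})"
            for theme, count in summary[label]
        )
        lines.append(f"{label.capitalize()}: {items}")
    return "\n".join(lines)
```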

Conclusion:

Product review categorization is important for brands to “hear” their customers’ voices more clearly. This approach could certainly be improved, especially the system prompt sent to the model; however, it gives us an interesting peek into the potential use cases of LLMs for e-commerce brands.

GitHub for the Jupyter Notebook: https://github.com/younesrifai